Using private container image registries is common practice in the industry, as it ensures that the applications packaged in the images are only accessible to users who hold the right credentials. In addition, authenticating against some registries helps overcome pull rate limits, for example when using a paid Docker Hub subscription.

Container ship by Sketchfab

The authentication process for image registries is pretty straightforward when using a container runtime locally, like Docker. In the end, all you need to do is set your local configuration to use the right credentials. In the case of Docker, this can be done by running the docker login command. However, in a Kubernetes cluster, where each node runs its own container runtime, this process becomes considerably more complex.

Pulling private images 📦🔒

When we want to deploy images from private container registries in Kubernetes, we first need to provide the credentials so that the container runtime on the node can authenticate before pulling them. The credentials are normally stored in a Kubernetes secret of type kubernetes.io/dockerconfigjson, created with the kubectl create secret docker-registry command, which holds the JSON-formatted authentication parameters as a base64-encoded string.

kubectl create secret docker-registry mysecret \
  -n <your-namespace> \
  --docker-server=<your-registry-server> \
  --docker-username=<your-name> \
  --docker-password=<your-password> \
  --docker-email=<your-email>
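To make the contents of such a secret less opaque, here is a rough sketch that rebuilds the JSON document locally with plain shell tools. The registry host, user name, and password are made-up placeholders, and the layout reflects the usual Docker config format as I understand it, so treat it as an illustration rather than a reference.

```shell
# Hypothetical placeholder values, for illustration only
REGISTRY_SERVER="registry.example.com"
REGISTRY_USER="janedoe"
REGISTRY_PASSWORD="not-a-real-password"

# The "auth" field is base64("username:password")
AUTH=$(printf '%s:%s' "$REGISTRY_USER" "$REGISTRY_PASSWORD" | base64 | tr -d '\n')

# JSON document stored (base64-encoded) under the secret's .dockerconfigjson key
DOCKER_CONFIG_JSON=$(printf '{"auths":{"%s":{"username":"%s","password":"%s","auth":"%s"}}}' \
  "$REGISTRY_SERVER" "$REGISTRY_USER" "$REGISTRY_PASSWORD" "$AUTH")

echo "$DOCKER_CONFIG_JSON"
```

Note that base64 is an encoding, not encryption: anyone who can read the secret can recover the password.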

These credentials can then be used in two ways:

- Referencing the secret name with the imagePullSecrets directive in the pod manifest, as in the snippet below. In this case, the credentials are used only by the pods that reference them.

apiVersion: v1
kind: Pod
metadata:
  name: foo
  namespace: default
spec:
  containers:
    - name: foo
      image: janedoe/awesomeapp:v1
  imagePullSecrets:
    - name: mysecret

- Attaching them to the default service account of a namespace. In this case, all the pods in the namespace that run under the default service account will use the credentials when pulling images from the private registry.

kubectl patch serviceaccount default \
  -p "{\"imagePullSecrets\": [{\"name\": \"mysecret\"}]}" \
  -n default

The second approach can provide cluster-wide authentication, but to make it work, the cluster manager needs to create the secret in every namespace and patch the default service account of each one. In addition, new namespaces are not updated automatically, so every time one is created the secret has to be added and the service account patched again.
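To give an idea of what that manual work looks like, here is a minimal sketch of a helper that patches the default service account of every existing namespace. The function name patch_all_namespaces is made up for this example, and it assumes the secret has already been created in each namespace.

```shell
# Attach an existing pull secret to the default service account of every namespace.
# Assumes a secret with the given name already exists in each of those namespaces.
patch_all_namespaces() {
  secret_name="$1"
  patch=$(printf '{"imagePullSecrets": [{"name": "%s"}]}' "$secret_name")
  # List the names of all namespaces, separated by spaces
  for ns in $(kubectl get namespaces -o jsonpath='{.items[*].metadata.name}'); do
    kubectl patch serviceaccount default -n "$ns" -p "$patch"
  done
}

# Usage: patch_all_namespaces mysecret
```

Even with a helper like this, somebody has to remember to run it again whenever a namespace is added.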

Here is where the tool we will be reviewing comes in handy.

Imagepullsecret-patcher 🤖🔒

Imagepullsecret-patcher is an open source project by Titansoft, a Singapore-based software development company. It makes it easier to set cluster-wide credentials for accessing image registries.

It is implemented as a container image containing a Kubernetes client-go application, which talks to the Kubernetes API to create an imagePullSecret in each namespace and patches the namespace's default service account so that it uses the secret. Furthermore, every time a new namespace is created, the same process is automatically applied to it.

NOTE: There might be some special cases in which you do not want a namespace to use the cluster-wide imagePullSecrets (e.g. for security reasons). No worries: the patcher can be disabled for specific namespaces by adding a special annotation to the namespace.

k8s.titansoft.com/imagepullsecret-patcher-exclude: "true"
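As an illustration, the sketch below writes a namespace manifest that carries this annotation. The namespace name no-patch-demo and the file name are hypothetical, chosen only for this example.

```shell
# "no-patch-demo" is a hypothetical namespace name used only for illustration.
cat > excluded-namespace.yaml <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: no-patch-demo
  annotations:
    # Annotation values are strings, so the boolean-looking value is quoted.
    k8s.titansoft.com/imagepullsecret-patcher-exclude: "true"
EOF

# Apply it with: kubectl apply -f excluded-namespace.yaml
# For an existing namespace, an equivalent one-liner would be:
#   kubectl annotate namespace no-patch-demo k8s.titansoft.com/imagepullsecret-patcher-exclude=true
```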

Deploying it ➡️☸️

First, let’s create a specific namespace for the pullsecrets patcher. You can do it by issuing the following command.

kubectl create namespace imagepullsecret-patcher

Once the namespace is created, you need to create a secret containing your registry credentials. To do so, run the following command. If you use Docker Hub as your registry, pass https://index.docker.io/v1/ as the --docker-server value.

kubectl create secret docker-registry registry-credentials \
  -n imagepullsecret-patcher \
  --docker-server=<your-registry-server> \
  --docker-username=<your-name> \
  --docker-password=<your-password> \
  --docker-email=<your-email>

Finally, deploy the rest of the resources that the patcher needs to work. The following command deploys a ClusterRole with permissions to create and modify secrets and service accounts, as well as a service account for the patcher itself. The two are bound together by a ClusterRoleBinding. Lastly, it creates a deployment that mounts the secret you created manually and runs under that service account.

kubectl apply -f https://raw.githubusercontent.com/mifonpe/pullsecrets-cluster-demo/main/manifests.yaml

You can review all of these resources in this file in the example repo.

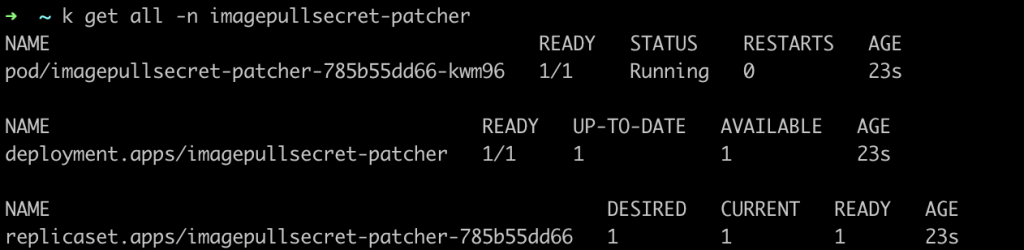

Once the deployment is up, it’s time to check if it worked. If you check the resources in the imagepullsecret-patcher namespace, you should see something like this.

Using it ⚙️☸️

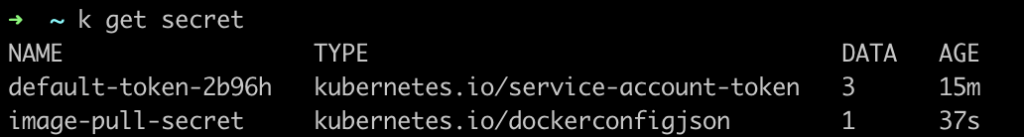

Once the installation has succeeded, you can check the default namespace secrets. You should be able to see the secret created by the patcher.

NOTE: k is an alias for kubectl

Finally, if you check the default service account on the default namespace, it should have been patched in order to use the new secret.

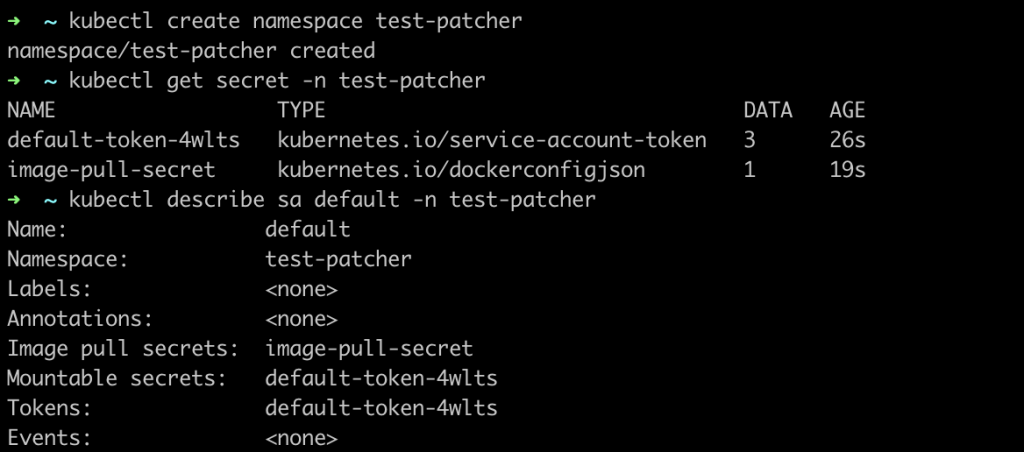
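For readers who prefer text to screenshots, the checks above can be approximated with a small helper like the one below. The function name check_patched_namespace is made up for this example, and the exact output depends on your cluster and on how the patcher names its secret.

```shell
# Show the secrets in a namespace and the pull secrets attached to its default service account.
check_patched_namespace() {
  ns="${1:-default}"
  # List the secrets that exist in the namespace
  kubectl get secrets -n "$ns"
  # Print the names of the imagePullSecrets referenced by the default service account
  kubectl get serviceaccount default -n "$ns" -o jsonpath='{.imagePullSecrets[*].name}'
}

# Usage: check_patched_namespace default
```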

Let’s create a new namespace to check how it works. Execute the following command to create a new, empty namespace.

kubectl create namespace test-patcher

Now let’s run the same commands that we ran against the default namespace. You will see that the patcher created the secret and patched the default service account.

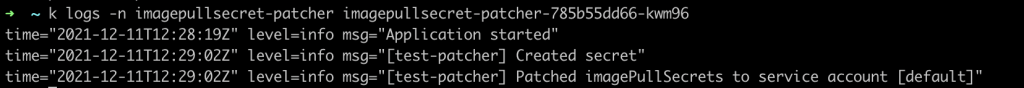

If you check the logs of the patcher container, you can see how it detected the newly created namespace and reacted to this event.

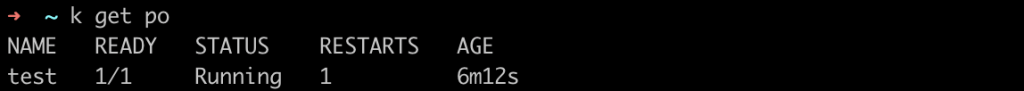

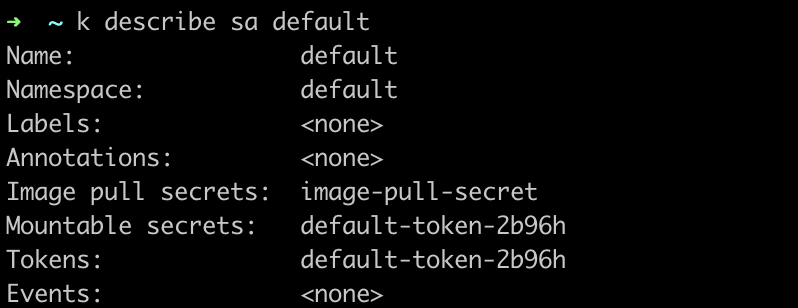

Finally, let’s pull a private image. For this example I will be pulling a private image from Docker Hub that was built for another post on the blog.

To create a pod that uses your private image, you can use the following command.

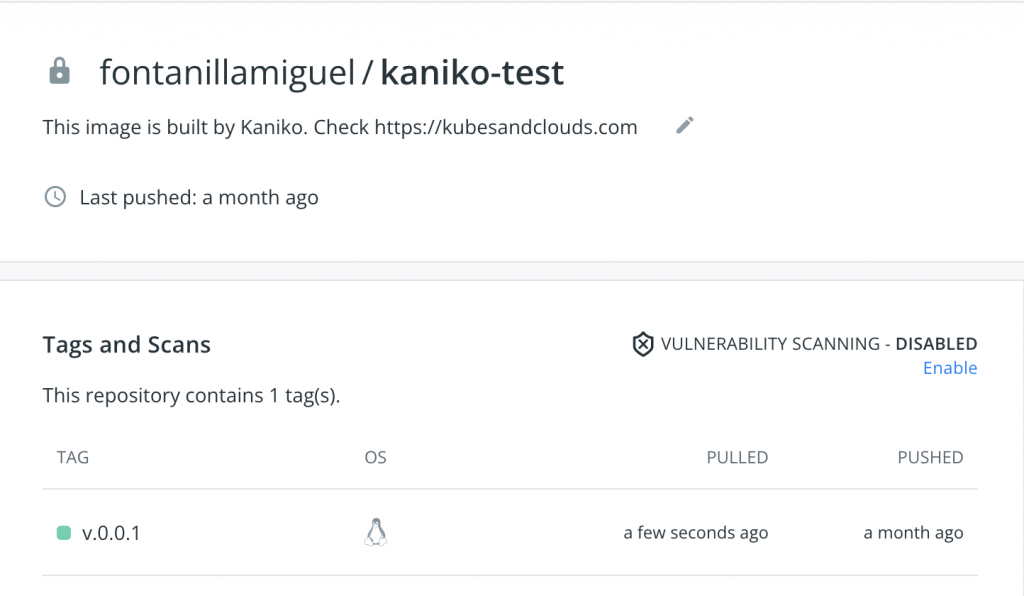

kubectl run test --image <your-private-image>:<your-tag>

Check if the pod is running.

If you describe the pod, you should be able to see how the private image was pulled by the cluster!
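The two checks above could look roughly like the sketch below. The helper name inspect_pod is hypothetical, and it defaults to the pod name test used in the kubectl run command.

```shell
# Print the phase of a pod, then its full description (including image pull events).
inspect_pod() {
  pod="${1:-test}"
  # Expected to print "Running" once the image has been pulled and the container started
  kubectl get pod "$pod" -o jsonpath='{.status.phase}'
  echo
  # The Events section at the bottom shows whether the image pull succeeded
  kubectl describe pod "$pod"
}

# Usage: inspect_pod test
```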

How to make it better? ➕🤖

In my case, I manage most of the cluster add-ons and components using GitLab CI and Helmfile (in case you have never heard of it, you can find an article about Helmfile here). My objective with this approach is to avoid any manual intervention on the cluster, so that bootstrap and disaster recovery procedures can be fully automated.

Thus, I install the patcher as a Helm release, and inject the variables from GitLab CI, avoiding manual secret creation or credentials leakage. Furthermore, I use Helm templating functions to inject the authentication string coming from the CI Variables. The snippet below shows how to implement this exact solution for the secret.

This solution can be extended to any CI/CD platform. The credentials are configured only once in your secret storage or external secret vault, and the CI takes care of deploying them to your cluster or set of clusters.

apiVersion: v1
kind: Secret
type: kubernetes.io/dockerconfigjson
metadata:
  name: image-pull-secret-src
  namespace: imagepullsecret-patcher
data:
  .dockerconfigjson:
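The value under .dockerconfigjson has to be the base64-encoded Docker config JSON. As a plain-shell stand-in for the Helm templating (not the Helm template itself), the sketch below renders the same manifest from environment variables. The variable names REGISTRY_SERVER, REGISTRY_USER, and REGISTRY_PASSWORD are assumptions, and the fallback values are placeholders.

```shell
# Hypothetical CI variables; in a real pipeline they would come from the CI secret store.
REGISTRY_SERVER="${REGISTRY_SERVER:-https://index.docker.io/v1/}"
REGISTRY_USER="${REGISTRY_USER:-janedoe}"
REGISTRY_PASSWORD="${REGISTRY_PASSWORD:-not-a-real-password}"

# "auth" is base64("username:password"); tr strips any line wrapping added by base64
AUTH=$(printf '%s:%s' "$REGISTRY_USER" "$REGISTRY_PASSWORD" | base64 | tr -d '\n')
CONFIG_JSON=$(printf '{"auths":{"%s":{"auth":"%s"}}}' "$REGISTRY_SERVER" "$AUTH")

# Values under "data" must be base64-encoded and fit on a single line
ENCODED_CONFIG=$(printf '%s' "$CONFIG_JSON" | base64 | tr -d '\n')

# Render the manifest to stdout
cat <<EOF
apiVersion: v1
kind: Secret
type: kubernetes.io/dockerconfigjson
metadata:
  name: image-pull-secret-src
  namespace: imagepullsecret-patcher
data:
  .dockerconfigjson: ${ENCODED_CONFIG}
EOF
```

The rendered output could be piped to kubectl apply -f - from a pipeline job, so the credentials never need to be typed by hand.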